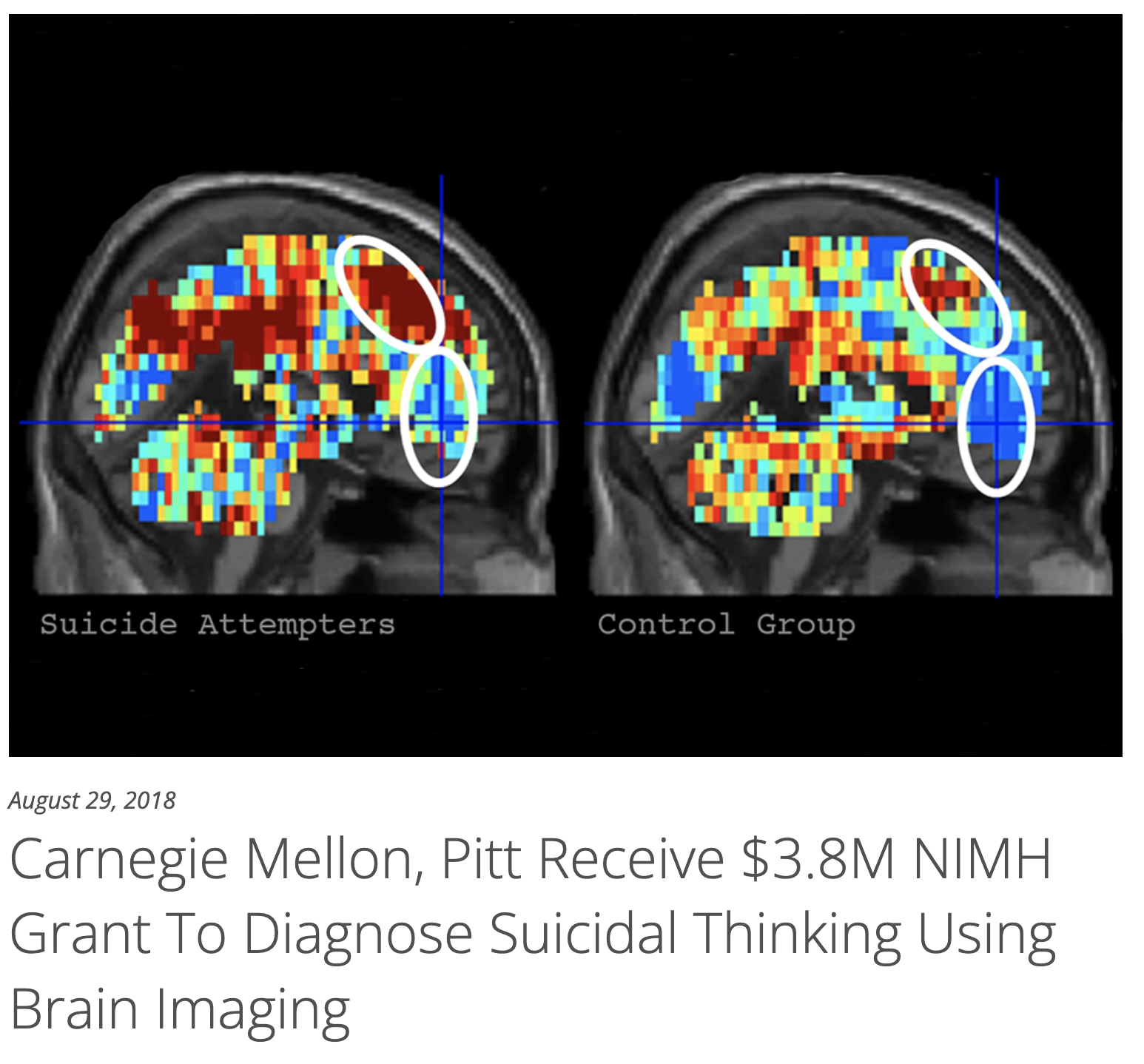

Cost-effectiveness is an interesting issue. Clearly we aren't there yet, but I also think people believe MRI is more expensive than it really is. Right now, I pay $480/hour for a 3T scanner. I'd ballpark their task at ~30 minutes, even including a structural scan for registration, which works out to roughly $240 per session at research rates. That would almost certainly be marked up in a clinical context, but with technological advances it's not entirely unreasonable to think the cost could drop into a cost-effective range, given that we are dealing with rare, hard-to-predict events with catastrophic consequences. It's also not clear who would read the scan (psychiatry? neuroradiology? the IT guy who runs the machine learning algorithm? We're in uncharted territory here...). Definitely worth thinking about, but I also think it would be silly to stop research on this topic just because it's not cost-effective at this time.