Wonder about your thoughts on this paper, summed up in the NY Times. My personal take is that while AI is likely good for some things, it creates its own errors, and AI + radiology eyes is going to emerge as the gold standard.

Also, the level of discussion you guys have about this is so much higher than the article that it makes me wonder if the authors ever reach out to you?

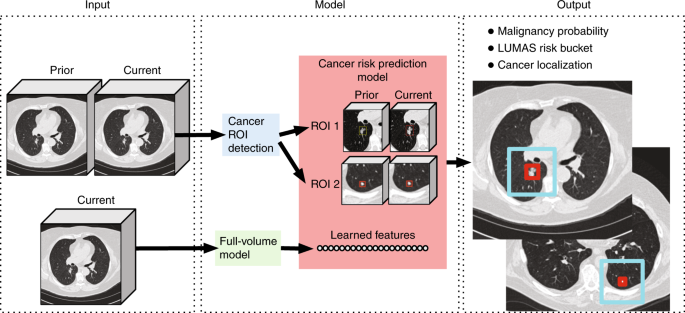

Here is the original paper by Google in Nature:

International evaluation of an AI system for breast cancer screening

To the NYTimes' credit, they quoted one radiologist, Dr. Lehman (as well as one of the co-authors, Dr. Etemadi, who is an anesthesia resident?). Dr. Lehman has a generally bullish long-term outlook on AI for radiology and gives a measured take on the paper that I think is on point. Several points are worth emphasis.

There can be a disconnect between a laboratory study and clinical utility. In the mammography world, the CAD (computer-aided detection) systems developed in the 1990s were approved based on 'reader studies' with ROC curves showing that CAD 'outperformed' the average radiologist, just like the current paper. Fast forward to the present day to see what the reality is. We have new technologies like tomosynthesis that improve human performance but that the approved CAD technology can't utilize. We have filmless mammograms in PACS systems making it easy to compare with tons of prior studies, but CAD can't integrate those either. Radiologists have to take extra time in each study to review the CAD findings and dismiss them as irrelevant, but at least radiologists get paid more to take this extra time. CAD makers have raked in the dough too. The cost to society in the US is $400 million a year. However, diagnostic performance in the contemporary digital mammography screening setting is not clearly better with CAD than without it. This conclusion is drawn in a study by Dr. Lehman published in JAMA IM:

Diagnostic Accuracy of Digital Screening Mammography With and Without Computer-Aided Detection. - PubMed - NCBI

CAD provides a cautionary tale: these lay headline-making studies about AI should be looked at like preclinical animal studies in the drug development world. The clinical utility remains unproven. Moreover, even when utility is proven at one point in time, parallel advances in other technology can render the tool less useful in the near future.

Let's examine the details of the Google study and how they relate to clinical reality.

In the FIRST part of the study that contributes to the lay headline claim that AI outperforms radiologists, the authors looked at the performance of the AI system, trained on mammograms from the UK/NHS, on predicting cancer in mammograms from Northwestern Medicine in the US. For this test set, they defined radiologist performance using the original radiology reports produced in routine clinical practice (a patient who was recalled for additional diagnostic evaluation, i.e. BI-RADS 0, counts as a positive call). There are two tricks to be aware of:

FIRST, they defined ground truth as having a breast cancer diagnosis made within 27 months after the mammogram. They justify this because they want to mitigate gatekeeper bias: biopsies are only triggered based on radiological suspicion. They defined the follow-up interval to include the next screening exam (they say 2 years) plus three months (time to biopsy). The problem is that 27 months is a long time. Different organizations disagree as to what is the most cost-effective screening interval (USPSTF recommends biennial), but the American College of Radiology and the American Cancer Society recommend annual screening because cancer can grow quickly and more frequent screening saves slightly more lives. I suspect annual screening is the more common practice in the US dataset, as it is at my institution - look at the Nature paper's Extended Data Figure 4: the calculated sensitivity drops much more when the follow-up interval goes past the 1 year threshold than it does moving past the 2 year threshold. If someone is diagnosed with breast cancer after a negative mammogram, it's because the cancer was visible but missed by the radiologist, the cancer was present but not visible by mammogram, or the cancer developed after the mammogram. As the follow-up interval gets longer, it's increasingly likely to be the last of these (that it was not diagnosable at that original time) rather than the first (that it was missed). In retrospective reviews, the proportion of 'interval cancers' (not detected by screening, diagnosed before the next screen) that are truly missed cases (false negatives) is estimated to be a minority (20-25%) (see The epidemiology, radiology and biological characteristics of interval breast cancers in population mammography screening). Thus, with the longer follow-up interval definition, radiologists' sensitivity is lower than conventional benchmarks (87% sensitivity with 1 year follow-up, see the benchmarking study by Dr. Lehman: https://www.ncbi.nlm.nih.gov/pubmed/27918707) because more of the cancers that these patients get later are not even present at the time of screening! In the setting of annual screening in the US, I think a 15 month follow-up is a more reasonable definition that still reduces gatekeeper bias.
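To make the follow-up window argument concrete, here is a toy sketch in Python. Every count in it is hypothetical (not taken from the Google paper or the benchmarks); the numbers were picked only so that roughly a quarter of the interval cancers at 12 months are true misses, in line with the 20-25% estimate above. The function name and the constant-rate assumption for newly developing cancers are illustrative assumptions as well.

```python
# Toy model: how the follow-up window used to define "ground truth" changes
# a reader's apparent sensitivity. All counts are HYPOTHETICAL (per 10,000 screens).

def apparent_sensitivity(detected, missed_present, new_per_month, window_months):
    """Sensitivity when any cancer diagnosed within the window counts as positive.

    detected        -- cancers found at the index screen (true positives)
    missed_present  -- cancers present at screening but not called
    new_per_month   -- cancers that did not exist at screening, diagnosed later
    window_months   -- length of the follow-up window
    Simplification: every missed-but-present cancer surfaces within the window.
    """
    not_called = missed_present + new_per_month * window_months
    return detected / (detected + not_called)

DETECTED, MISSED_PRESENT, NEW_PER_MONTH = 50, 2, 0.5  # assumed values

# Sensitivity restricted to cancers that actually existed at screening time.
sensitivity_present_only = DETECTED / (DETECTED + MISSED_PRESENT)
print(f"cancers present at screening only: {sensitivity_present_only:.3f}")

for months in (12, 15, 27):
    s = apparent_sensitivity(DETECTED, MISSED_PRESENT, NEW_PER_MONTH, months)
    print(f"{months:>2}-month window: {s:.3f}")
```

In this sketch the reader's behavior never changes; only the denominator grows as cancers that did not exist at screening are added to it, so the apparent sensitivity falls as the window lengthens.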

OK so sensitivity is artificially lower because these cancers found on follow-up don't exist yet - but AI is still out-diagnosing humans on the cancers that do exist at the time, right? Not necessarily. AI can outperform in one of a few ways: (1) it can find a cancer that is visible to the radiologist but the radiologist missed, or (2) it can find a cancer that is invisible to the radiologist; these are useful (1 leads to biopsy, 2 leads to a different imaging modality to try to localize a lesion). However, there is another possibility that must be considered: (3) it can predict the future development of cancer even though none exists at this time. Dr. Lehman has shown that deep learning on mammograms predicts 5-year risk of breast cancer with an AUC of 0.68, which is as good as or better than the risk scores that breast specialists commonly use based on factors like family history, hormone exposure, age, breast density, etc.

A Deep Learning Mammography-based Model for Improved Breast Cancer Risk Prediction. - PubMed - NCBI

Note the AUC of the Google AI on the US test set for 2-year risk of breast cancer was 0.76. Risk prediction is useful in its own way, but it's not the same as making a cancer diagnosis. There's no lesion to directly sample, leaving considerable uncertainty - the AUC is not 1. There's no spot that someone can cut out or irradiate right now. The risk may be multifactorial, with some modifiable and some nonmodifiable risks - maybe the patient is smoking and the AI sees vascular calcifications, or maybe the normal breast tissue shows changes due to a hormonal exposure that also increases cancer risk, or the breast shows changes of prior radiation therapy to the chest. It's hard to conclude what to do other than perhaps screen for cancer more often. I don't think people see the value proposition of mammography plus AI as population-based risk assessment. Screening mammography already has enough haters from the Gil Welch et al. school of public health thought.

SECOND, the US dataset was enriched in a particular way to help statistical power, but that affects performance measures. Whereas the training set from the UK was a random sample of a screening population, the test set from the US was constructed using screening mammograms from all the women who had biopsies at Northwestern in a certain time period, plus a random sample of the unbiopsied screening population. This means the dataset is enriched for people who have biopsies. People who have biopsies are of two sorts: those with screening-detected abnormalities and those with clinically detected abnormalities. Via the first pathway, the screening mammogram and subsequent diagnostic mammogram and/or ultrasound showed an abnormality that looked suspicious for cancer. Most of these turn out not to be cancer - per the benchmarks linked above, the so-called positive predictive value (PPV) 2 is around 25-30%, with the "acceptable range" being 20-40%. Because of this class imbalance, there is preferential enrichment for hard cases that are false positives - things that look like cancer but turned out not to be. There is a lower proportion of easy true negative cases, which were never biopsied. The specificity will be lower. Via the second pathway, clinically detected abnormalities were either missed on the screener or developed after it, and the patient presented for other reasons (patient or doctor felt a lump, or they had focal pain, or they had metastatic disease detected some other way, and this led to a diagnostic cascade culminating in a tissue diagnosis of breast cancer). That means there is enrichment for hard cases that are easy to miss or not possible to diagnose - false negatives. The sensitivity will be lower. These are called spectrum effects, or spectrum bias. The magnitude of these effects is hard to gauge, and the authors try to de-bias the effects of undersampling normals on the absolute performance metrics using inverse probability weighting.
However, the point remains that you're comparing AI against radiologists selectively on the cases that the radiologists found hard -- it's not a fair playing field. If in a parallel universe AI were the one rendering solo interpretation and gatekeeping biopsies, it's conceivable that you would see humans out-diagnosing AI on the cases on which AI had the most trouble.
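For readers unfamiliar with inverse probability weighting, here is a minimal sketch of the idea in Python, applied to specificity only. The strata, counts, and the 10% sampling fraction are all hypothetical and are not the paper's actual numbers or code; the `Stratum` class and `specificity` function are illustrative names.

```python
from dataclasses import dataclass

@dataclass
class Stratum:
    name: str
    sampling_fraction: float  # chance a case from this stratum entered the test set
    true_negatives: int       # sampled non-cancers read as negative
    false_positives: int      # sampled non-cancers read as positive (recalled)

def specificity(strata, weighted):
    """Specificity over non-cancer cases, optionally weighting each case by
    the inverse of its sampling probability."""
    tn = fp = 0.0
    for s in strata:
        w = 1.0 / s.sampling_fraction if weighted else 1.0
        tn += w * s.true_negatives
        fp += w * s.false_positives
    return tn / (tn + fp)

# HYPOTHETICAL test set: every biopsied non-cancer is included, but only
# 10% of the unbiopsied non-cancers are sampled.
strata = [
    Stratum("biopsied, benign", sampling_fraction=1.0, true_negatives=0, false_positives=150),
    Stratum("not biopsied", sampling_fraction=0.1, true_negatives=900, false_positives=80),
]

print(f"unweighted specificity: {specificity(strata, weighted=False):.3f}")
print(f"IPW specificity:        {specificity(strata, weighted=True):.3f}")
```

The weighting scales the undersampled normals back up, so the absolute specificity is no longer dragged down by the enrichment. It does not change which cases were selected by the radiologists' own decisions, which is the part of the argument above that weighting cannot address.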

In the SECOND part of the study that contributes to the lay headline claim that AI outperforms radiologists, Google got an external firm to do a reader study involving six radiologists, four of whom are not breast fellowship-trained but are probably general radiologists who read mammograms some of the time. These radiologists were asked to read 500 mammograms from the US data set, again with enrichment to ensure statistical power: 25% biopsy-proven cancer, 25% biopsy-proven not-cancer, 50% not biopsied. I've already mentioned how enriching for hard-for-human cases (here, the biopsy-proven not-cancer) makes it hard for humans. In this situation, being a prospective study, a different sort of spectrum bias also becomes relevant: changing prevalence changes the behavior of the readers. When prevalence is higher, readers should be more aggressive. When radiologists read screeners in routine practice, in the back of their minds is a target abnormal interpretation rate (recalls from screening) of ~10%. That benchmark is the first step in a series of steps towards getting a reasonable performance measure for cancer detection. That benchmark is dependent on the population incidence of breast cancer, which is 0.6% in a screening population. All that calibration goes out the window in a simulation in which 50% of the cases are abnormal, half of which are cancer and half of which are not. To top it off, the readers were not informed of the enrichment levels! Mathematically, changing prevalence should not perturb the AUC, but it certainly changes the mindset of the reader. My confidence would be shaken if I found myself 10 stacks of 10 screening mammograms deep and had already called back 50 studies rather than a more typical 10.
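A back-of-the-envelope sketch in Python shows how far the recall rate moves with prevalence even for a reader whose operating point stays fixed. The 87% sensitivity and the 0.6% and 25% prevalences come from the discussion above; the 90% specificity is an assumed round number for illustration, and the function names are hypothetical.

```python
def recall_rate(prevalence, sensitivity, specificity):
    """Fraction of all studies called abnormal (true plus false positives)."""
    return prevalence * sensitivity + (1 - prevalence) * (1 - specificity)

def ppv(prevalence, sensitivity, specificity):
    """Fraction of abnormal calls that turn out to be cancer."""
    true_positive_fraction = prevalence * sensitivity
    return true_positive_fraction / recall_rate(prevalence, sensitivity, specificity)

SENSITIVITY = 0.87   # 1-year benchmark quoted above
SPECIFICITY = 0.90   # ASSUMED for illustration

for label, prevalence in (("routine screening", 0.006), ("enriched reader study", 0.25)):
    r = recall_rate(prevalence, SENSITIVITY, SPECIFICITY)
    p = ppv(prevalence, SENSITIVITY, SPECIFICITY)
    print(f"{label}: recalls per 100 = {100 * r:.1f}, PPV = {p:.2f}")
```

This simple model treats all non-cancers alike, so it understates the effect in the reader study, where half of the non-cancers were suspicious enough to have been biopsied. Even so, the fixed-threshold reader goes from roughly one recall in ten to nearly three in ten, which is the kind of shift that would make a reader second-guess their calibration.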

You get the feeling something is weird when you see that the average performance of the six readers, using the traditional 1 year follow-up definition, is only about 60% sensitivity and 75% specificity (Fig 3c).

Last point:

In the real world, initially, radiologists will use AI as a tool rather than be replaced altogether by AI. No tech company will wholly assume the liability risk of misses in a cancer screening program. Repeating the above:

As said above, even with the most basic tasks and biased studies, none have shown that AI alone is better than AI+Radiologist (i.e. AI being a tool). In a real-world setting it must have general intelligence capabilities, something we are far away from.

Until we have a clinical trial showing that performance is superior for AI alone over AI+Radiologist, I do not think AI will be doing anything alone, at least in a country that is not resource-poor. Dr. Lehman, as paraphrased in the NYT, seems to think eventually computers will render the sole interpretation for some mammograms. The key word is 'eventually.' The timeline is uncertain - nobody can predict the future - but I think progress in the highly regulated and litigious field of medicine tends to be incremental, not disruptive, and the radiology workforce will have time to adapt to practice-changing technology, as it has plenty of times in the past.