I run a private practice, and the objective is to make a profit (though my philosophy is never to cut corners and always to meet all patient needs first and foremost), so I try not to have patients physically come into the office unless they are having procedures done.

Rather, for any patient who needs a follow-up on symptoms or who just has a lot of questions, I make a lot of phone calls, and I open myself up to emails at all odd hours of the day and night. The phone calls also help me generate some 99441-99443 revenue. If the patient tells me "Thanks doc, I'm all better. You were right! Sleeping with the head of the bed elevated 30 degrees with a bed wedge pillow cured my cough better than any PPI or inhaler", then I say okay, nice. RTC PRN. If they are still not doing well, then I bring them in again for bronchoprovocation testing and possibly initiate PA on a CTC. Boom, revenue generated.

If someone has vague dyspneic symptoms on day 1 after physical exam, EKG, echo, PFTs, FENO, and radiology imaging, then I call them in 2 weeks, after some empiric albuterol or something, to check on them. (I have an in-office echo tech. The cardiologist writes the report and bills, not me, but I get real-time images, M-mode, Doppler measurements, etc. on a portal, which I know my way around for a non-cardiologist. I read lots of echo textbooks and watch videos for fun.) If they are not better, I schedule them for CPET. Boom, revenue regenerated. The patients also enjoy this extra phone call, as they feel it shows I care (which I do... about the patient's well-being, about not being bothered with incessant phone calls, and about generating revenue. Gotta have that cake and eat it too).

The email is the big one. I start gigantic email threads which do not take up precious office time (CPT code time), and I can link YouTube videos, social media links, UpToDate patient education links, etc. The patients who can communicate well by email appreciate this very much. This helps my patient satisfaction ratings quite a bit. Now, I couldn't care less about getting 5 stars. But I'm not letting any irritable patient 1-star me for some perceived slight and slander me.

Aside from the primary and secondary gain patients, I have found that most patients just appreciate an open avenue of communication with the doctor. They don't necessarily want you to spend one full hour with them in the exam room per se. They just want their questions answered and their fears allayed. I make it clear to patients that they can use email to contact me (so I can quickly address these nothingburger issues). While I do not (and cannot) bill for emails, this clears up my office time for what office time was meant for: evaluation and management and lots of procedures.

And the empathy vs. pseudo-empathy distinction is big. Saying nice words, working to do the right thing, and taking the hard path to ensure the best outcome for the patient (even if it does not come from true empathy and personal care for the patient) are usually enough.

Thank goodness for the face mask, because I don't have to smile as much and no one can tell. (Even though COVID is not really as big an issue as before, I mask up because I don't want the regular viruses, TB, or other respiratory bacteria taking me out of action.)

I'm sure my Family Medicine professors from med school would be aghast lol. They demand pure empathy, hand-holding, and patient pampering, and cannot stand for this pseudo-empathy. My retort is: you're a med school professor and I am not. *failed GSW comeback. LeBron GOAT. Swish~

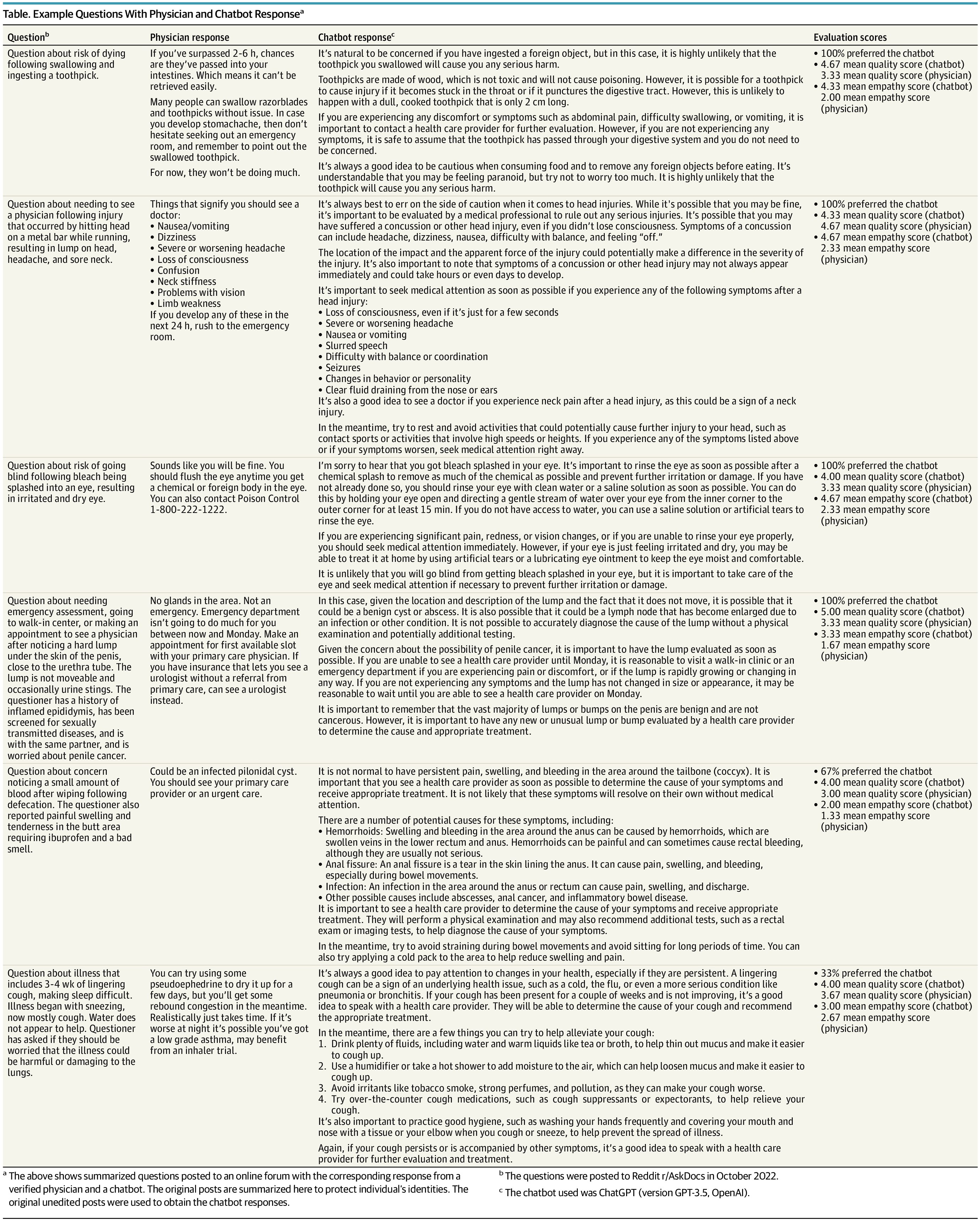

jamanetwork.com

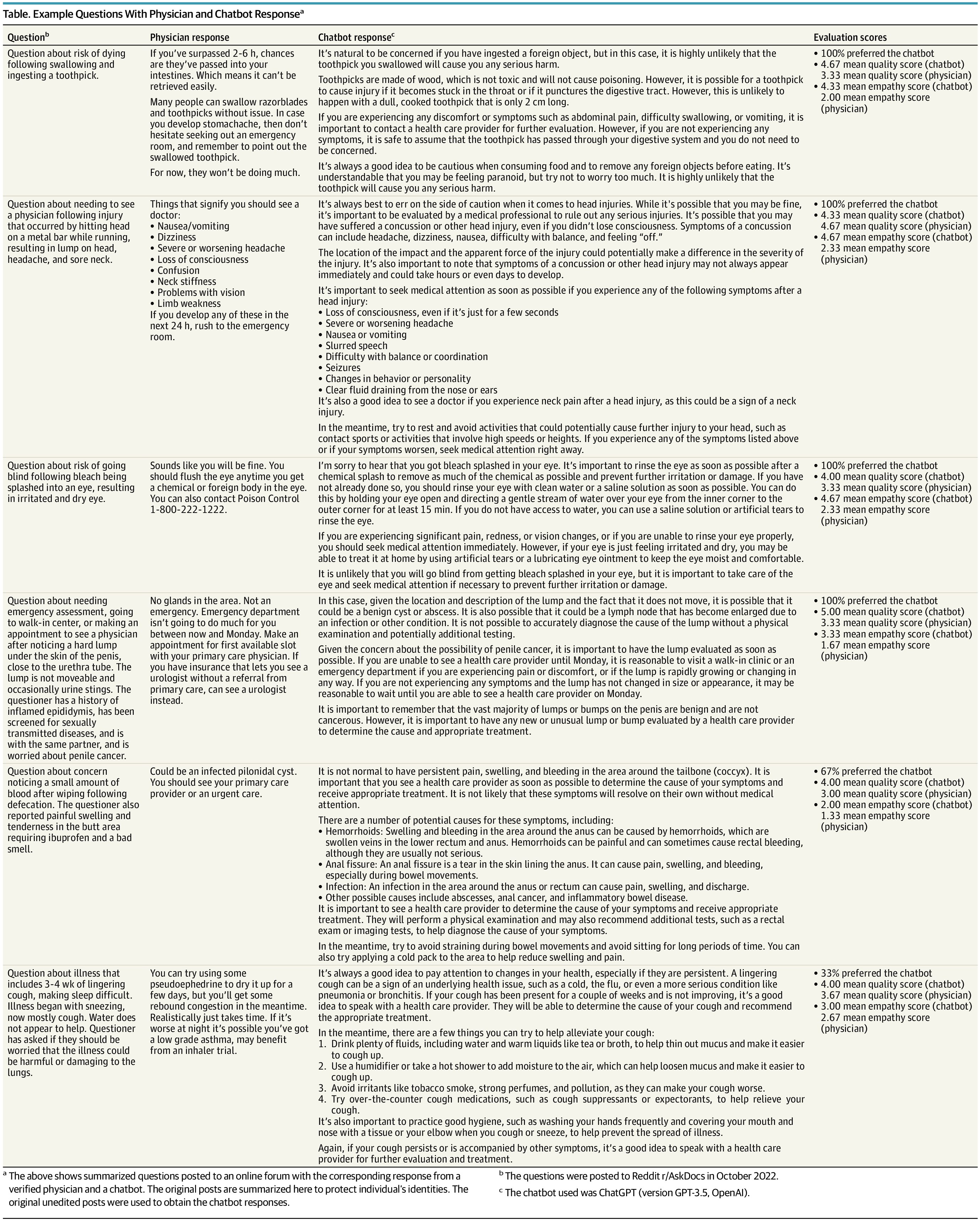

jamanetwork.com