I'm a PGY-3 who matched to a mid-tier academic hematology/oncology fellowship, and I'm excited about that. Just curious what your thoughts are on AI's impact on the field of oncology. From my naive experience so far, I think AI can generate as good or better treatment plans for most types of malignancy. If that is true, I would imagine the demand and salary for oncologists would go down?

The future of oncology, impacted by AI?

- Thread starter ypgg2008

- Joined

- Jul 4, 2015

- Messages

- 193

- Reaction score

- 162

As someone who has tried to incorporate predictive analytics into healthcare, and a general advocate for AI, I don't think this will happen in our lifetimes, and I think that within 6 months of fellowship you'll realize the same. Even if we get to true generative AI, I don't think our field is at risk (as opposed to other more algorithmic fields like general medicine or even pathology / radiology). I can think of 4 reasons off the top of my head, without even thinking that hard, why this won't happen:

1. The issue with AI today is its tendency towards generative nonsense. If it doesn't know something it just makes something up. There's a looooooot of grey zone in oncology (just think of your standard adjuvant patient: Is the cancer there? Is it not? Are there micrometastases? Your guess is as good as mine) and that's why we have tumor board. Think of someone in tumor board just making random stuff up in the middle of tumor board. Even if this happens 1% of the time they'd lose credibility because the stakes are so high. People are less forgiving of AI.

2. Even the most up to date AI algorithms are about 1-2 years behind the available literature, just because it takes time to train an AI model. As you'll soon see, if you're even 6 months out of date in onc, you're *really* out of date.

Now just the practical elements:

3. Believe me when I say this, NO AI COMPANY would want to take on the liability of the high stakes decisions involved in healthcare. Just imagine the headlines: "AI is the new death panel", "AI decides not to treat a patient post surgery and they met out", "AI kills frail elderly patients with chemotherapy". This goes back to point 1. *People are far less forgiving of AI* and AI companies obviously know this. That's why when you go into GPT-4 and type "hey I have a fever, cough, productive sputum, and I just french kissed someone with COVID. Do I have Covid?" it'll spit out "probably but check with a doctor first". For better or for worse we'll be the final say for the foreseeable future for practical, financial (cough legal dumping ground) reasons. OpenAI is struggling to turn a profit as it is without dumping 2x their net worth into legal fees. If you don't believe in the limitations of technology, at least believe in capitalism.

4. Palliative discussions. Enough said. I wouldn't want an AI telling grandma to go on hospice. Would you?

That said I actually welcome AI as a tool for outlining therapeutic options and spitting out evidence. That'd make my job so much easier. If anything I HOPE AI comes to onc because it can only make general oncology more feasible.

- Joined

- Jun 23, 2019

- Messages

- 1,045

- Reaction score

- 1,825

What makes you think AI could impact Oncology and not also impact Cardiology, Endocrinology, Nephrology, and basically any other non-procedural specialty? If your answer is “because Oncology is Algorithmic and other fields aren’t” then I’d say just wait about 12 months and come revisit that thought.

The real threat to Oncology is similar to all other fields: over-expansion (I think we’ve increased spots by 25% in the past 4-5 years) and payment reform.

- Joined

- Mar 6, 2005

- Messages

- 21,378

- Reaction score

- 17,914

Then how come I can't get anybody to come out here and work with me? I pay well and I'm awesome!

- Joined

- Mar 6, 2005

- Messages

- 21,378

- Reaction score

- 17,914

@ShuperNewbie gave a pretty nice rebuttal to this already, but I'm curious if you can expand on what you mean by this. I appreciate that your POV is naive (and you recognizing that), and I'm not trying to be antagonistic here. I really do want to understand what your concern is in this scenario.

I remember an old SDN thread about this ~2 years ago where we hashed out a lot of this:

forums.studentdoctor.net

Particularly the wonderful Ars Brevis article: https://ascopubs.org/doi/full/10.1200/JCO.2013.49.0235 (from 2013!) which imagines a world where your assumption that AI can generate as good or better treatment plan is true

Notably, this was before everyone started to get very hyped about generative AI, but still very relevant.

- Joined

- Mar 13, 2020

- Messages

- 90

- Reaction score

- 103

Spots have increased from 424 in 2008 to 663 in 2022, an increase of 56% (note the US pop increased by 16% in this time period and is aging).

Compare to other specialties (2017-2021):

Anesthesiology: 1,202 -> 1,460 (21.5% increase)

Emergency Medicine: 2,047 -> 2,840 (38.7% increase)

Family Medicine: 3,356 -> 4,823 (43.7% increase)

Internal Medicine: 7,233 -> 9,024 (24.7% increase)

Neurology: 492 -> 715 (45.3% increase)

Oncology: 549 -> 638 (16.2% increase)

Based on the data, it looks like over-expansion actually isn't a big issue in onc, or at least not as much compared to many other specialties. Curious to hear everyone else's thoughts since this is not something I've heard brought up before, as I was under the impression that there was a hem-onc shortage
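For anyone who wants to re-run the percentages, here is a minimal Python sketch using only the position counts quoted in this post. The function name `pct_increase` and the dictionary layout are just illustrative choices, and the counts themselves are taken at face value from the post rather than re-checked against the match data.

```python
def pct_increase(start: int, end: int) -> float:
    """Percent change from start to end, relative to the STARTING value."""
    return (end - start) / start * 100


# Position counts exactly as quoted in the post (2017 -> 2021).
positions = {
    "Anesthesiology": (1202, 1460),
    "Emergency Medicine": (2047, 2840),
    "Family Medicine": (3356, 4823),
    "Internal Medicine": (7233, 9024),
    "Neurology": (492, 715),
    "Oncology": (549, 638),
}

for specialty, (start, end) in positions.items():
    growth = pct_increase(start, end)
    print(f"{specialty}: {start:,} -> {end:,} ({growth:.1f}% increase)")

# Longer-run hem/onc figures quoted in the post (2008 -> 2022).
print(f"Hem/onc 2008-2022: {pct_increase(424, 663):.1f}% increase")
```

The slip this guards against is dividing by the ending count instead of the starting one, which understates growth. Either way, oncology comes out as the slowest-growing specialty on this list.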

- Joined

- Jun 23, 2019

- Messages

- 1,045

- Reaction score

- 1,825

I’m not saying it is a big threat, but I worry more about that and payment reform than our AI overlords.

- Joined

- Mar 13, 2020

- Messages

- 90

- Reaction score

- 103

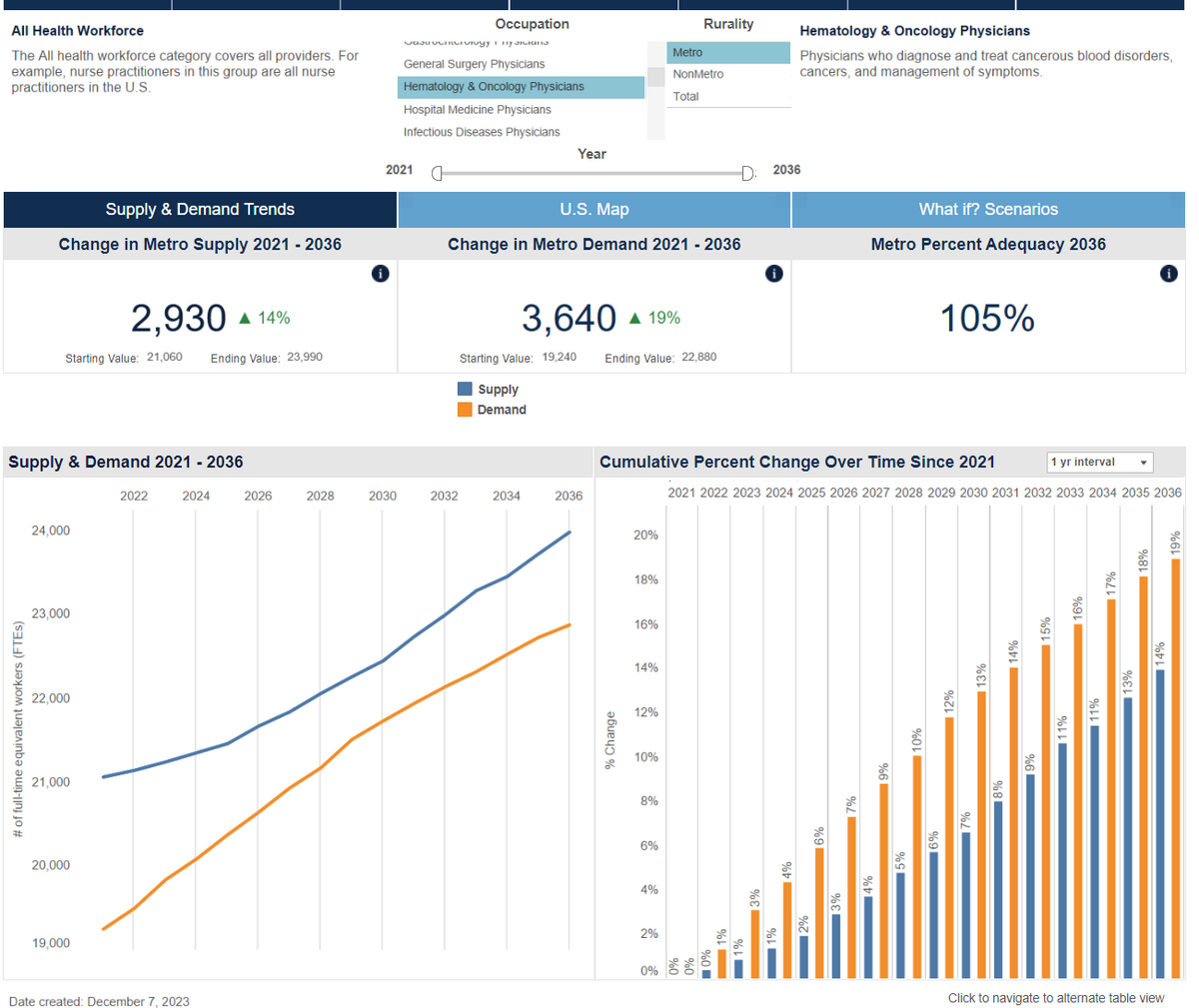

On this topic, I came across this government workforce projection data recently. It allows you to break the projection down by specialty. Looks like hem-onc currently has an oversupply of about 2000 physicians in metro areas. Projected out to 2032, that gap will decrease to 800, but the projection shows a metro-oversupply for every year.

(of note, this is the case for almost every specialty other than surgical subspecialties and cards)

data.hrsa.gov

Workforce Projections

- Joined

- May 16, 2019

- Messages

- 39

- Reaction score

- 8

I agree the onc pool has expanded, given what we can do/offer compared to even 3-5 yrs ago.

- Joined

- Mar 6, 2005

- Messages

- 21,378

- Reaction score

- 17,914

This timely article from JAMA popped into my Inbox today. Not oncology specific by any means, but rather than a bunch of internet randos (like me) spouting off on things we may or may not have any actual knowledge of, it was written by a medical informaticist (and hospitalist) at UCSF and an AI researcher at Stanford, so worth considering as a "Category 2B" recommendation per NCCN guidelines.

I'll also note that it reiterates a lot of things that have been said here already (hooray for internet randos!), noting that there are a lot of easy wins for AI in medicine (scribing, prior auth/appeals, billing, scheduling, etc.) that are likely to be implemented relatively quickly, but that any meaningful impacts on what we consider "patient care" are going to be quite a bit further down the road. The legal, regulatory, privacy, "Six Sigma" and other concerns inherent in healthcare are actually going to be much larger barriers to AI in medicine than the technology, or even its adoption by physicians and healthcare systems, will be.

I can definitely see some impressive inroads in decision support tools in the short term as well. My prior employer used (and was instrumental in creating) what is now the Elsevier Clinical Pathways program. I spent (and still do as my current employer is also a user) a lot of time working with them on creating, maintaining and updating the pathways. I think it's a very useful tool but requires far too much human interfacing to maintain and improve. AI would definitely be a huge benefit in a setting like that.

I just finished reading a New Yorker profile/interview of Jensen Huang, the founder and CEO of Nvidia, which morphed from a gaming chip company to an AI company that is currently the Tesla/Microsoft/Amazon/Walmart combined of AI computing power. The article was an interesting bit of insight into the business of AI that I certainly wasn't aware of. What a lot of people talk about as the advantage of AI is not so much the work it can do, but the cost savings of the work it does. We've known for some time how much energy generative AI uses (GPT-4 by itself consumes the same energy as 33K US households daily), but I wasn't aware of what the hardware costs on top of that. Nvidia's flagship A100 machine goes for ~$500K a box. GPT-4 by itself was created (and is maintained) using ~25,000 of them, which, at retail (which OpenAI clearly didn't pay), is ~$12.5 BILLION (with a B) for hardware alone. That's small next to the US federal budget for FY22 (~$6.5T) or US GDP (~$25T), but it's still a staggering capital outlay for one product before the power bill even arrives, so the cost savings are far from a given.
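As a sanity check on the hardware multiplication, here is a short Python sketch that uses only the figures quoted in this post (~$500K per box, ~25,000 boxes, ~$25T GDP). All three are the poster's approximations, not verified prices or counts, and the constant names are made up for illustration.

```python
# Figures as quoted in the post; all are the poster's rough approximations.
PRICE_PER_BOX_USD = 500_000   # "~$500K a box"
BOXES = 25_000                # "~25,000 of them"
US_GDP_FY22_USD = 25e12       # "~$25T"

# Retail hardware cost is simply unit price times unit count.
hardware_cost = PRICE_PER_BOX_USD * BOXES
share_of_gdp = hardware_cost / US_GDP_FY22_USD

print(f"Hardware at retail: ${hardware_cost:,}")
print(f"In billions of dollars: {hardware_cost / 1e9:.1f}")
print(f"Share of US GDP: {share_of_gdp:.4%}")
```

With these inputs the product lands in the billions of dollars, a small fraction of one percent of GDP. Still a lot of money for one model, but three orders of magnitude short of trillions.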

On a related note, a surgeon colleague of mine called me to talk through a few cases this morning and we wound up talking about AI in medicine. He said that the first time an AI bot starts screaming at a patient and storms out of the room for their circular logic and asking the same questions over and over again (which, let's be honest, is probably a pretty common part of most of our days in clinic) will be the end of AI in patient facing clinical medicine.

- Joined

- Mar 6, 2005

- Messages

- 21,378

- Reaction score

- 17,914

Bummed that the OP hasn't been back to further the discussion, but apparently JAMA is doing a whole series on AI in medicine right now.

This week's installment is an interview with John Ayers, an AI and public health researcher at UCSD who was the lead author on that article from earlier this year that said that Redditors preferred ChatGPT 3.5 to actual physician responses to their questions.

- Joined

- Jun 23, 2019

- Messages

- 1,045

- Reaction score

- 1,825

Can’t wait for it to be part of meaningful use

- Joined

- Mar 6, 2005

- Messages

- 21,378

- Reaction score

- 17,914

I thought they got rid of meaningless use? Or do I just ignore it and nobody GAF anymore? I'm cool either way.

- Joined

- Jul 4, 2015

- Messages

- 193

- Reaction score

- 162

I'm actually pretty excited for the use of AI in healthcare. For more serious medical issues like cancer, AI, even true generative AI, will not be able to replace us in our lifetimes (it's interesting because software engineers are worried that AI might replace them, haha. Frankenstein's monster engineering Frankenstein).

That said I'm interested in ways that AI can make our job easier. One big pet peeve that I have is when people like John Ayers take qualitative research and draw conclusions that these studies weren't ever meant to answer (a BIGGER pet peeve I have is when qualitative research itself doesn't acknowledge that there are conclusions that aren't meant to be drawn from the study, like in the aforementioned JAMA article, but that's a topic for another time). The JAMA AI study doesn't really show anything other than maybe, mayyyyyybe AI can type forum messages better than trained doctors who are typing in a hurry and not spell checking their posts, but other than that, it doesn't show much. SO much of communication gets lost in type (like sarcasm for example) that this study isn't even a decent measure of communication.

That said it'd be cool to see if AI could decrease phone triage burden for us. I doubt it'll be able to do this effectively (think of all those automated phone messages that you just outright ignore when it asks "have you tried XYZ first before I connect you to a human being") and I'm not super sure if it'll cut down on my workload (it's not really cutting my work down if I have to proofread everything it types) but it's a step in the right direction.

I would love to see AI try to help us with predictive analytics (e.g. which of these patients will do better with adjuvant therapy, like an oncotype but with clinical predictors and for other disease sites), but this might also be a tall order since 1. the base studies haven't been done yet so AI has nothing to train on, and when the base studies are completed then I don't really need AI to help me make a decision and 2. people are really complicated and AI has trouble with these complex systems.

To point #2, I once tried to implement a predictive analytic tool to predict mortality in a very large hospital, but it ran into a lot of issues. As an example of this complexity, it turned out that it was really, really hard to get the model to stop predicting someone was going to die if they had stable tachycardia from uncomplicated a-fib. We couldn't tell it to stop paying attention to tachycardia, we couldn't tell it to stop paying attention to irregular rhythms, we couldn't tell it to stop paying attention to tachycardia if pressures were stable, we couldn't tell it to stop paying attention to tachycardia if it had occurred in the past... etc. etc. etc., and yet, knowing that a patient with uncomplicated a-fib isn't going to die overnight is something that an intern can tell you without any prompting.

At its core, AI as we have it now (at least anything before GPT-4) is just an association / pattern recognition tool. The tricky thing is making sure its associations are accurate. I'm reminded of a story my friend at Waymo once told me, about how in the very early days of the model, it kept telling cars to make a right turn whenever it rained, and no one for a long time could figure out why it made that association. In healthcare though, the stakes are much higher.
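To make the a-fib problem concrete, here is a toy Python sketch of how a purely association-based risk estimate gets fooled. Everything in it is hypothetical: the cohort counts are invented for illustration (they are not from the hospital project described above), and `death_rate` is just a conditional frequency count, not how any real mortality model works.

```python
# Hypothetical cohort: (tachycardic, stable_afib, died) -> number of patients.
# All counts are invented purely to illustrate a confounded association.
cohort = {
    (True, False, True): 40,     # tachycardic from something serious, died
    (True, False, False): 60,    # tachycardic from something serious, survived
    (True, True, False): 20,     # fast but stable uncomplicated a-fib, survived
    (False, False, True): 10,    # not tachycardic, died
    (False, False, False): 870,  # not tachycardic, survived
}


def death_rate(cohort, tachycardic=None, stable_afib=None):
    """Observed death rate among patients matching the given features.

    A feature left as None is ignored, i.e. the estimate cannot "see" it.
    """
    died = total = 0
    for (tachy, afib, dead), n in cohort.items():
        if tachycardic is not None and tachy != tachycardic:
            continue
        if stable_afib is not None and afib != stable_afib:
            continue
        total += n
        if dead:
            died += n
    return died / total


# An estimate that only knows about heart rate flags every fast heart rate.
print(f"Risk given tachycardia only: {death_rate(cohort, tachycardic=True):.1%}")
print(f"Risk given no tachycardia:   {death_rate(cohort, tachycardic=False):.1%}")

# Only once stable a-fib is an explicit feature does the risk drop.
print(f"Risk given tachycardia + stable a-fib: "
      f"{death_rate(cohort, tachycardic=True, stable_afib=True):.1%}")
```

In this toy case the fix is a single extra feature. In a real model with hundreds of correlated inputs, nobody hands you that feature, which is roughly the wall we kept hitting.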

Well don't leave us hanging - what was the reason!?

- Joined

- Jul 4, 2015

- Messages

- 193

- Reaction score

- 162

I would love to see AI try to help us with predictive analytics (e.g. which of these patients will do better with adjuvant therapy, like an oncotype but with clinical predictors and for other disease sites), but this might also be a tall order since 1. the base studies haven't been done yet so AI has nothing to train on, and when the base studies are completed then I don't really need AI to help me make a decision

And just a quick anecdote to this point above (I know... quoting myself is like kissing my own ass), I'm reminded of all the bright eyed and bushy tailed software engineers who would come to us with models that were promised to help us diagnose complex disease, and when we ran these models or diagnostic aids, we found that they were really good at..... diagnosing pneumonias... or UTIs.... or things like that.

But the thing is... I don't NEED AI's help to diagnose a pneumonia lol. I need it to help me tell the difference between pneumonia and pneumonitis, or to tell me whether someone has a rare vasculitis, but the studies in this vein haven't materialized yet and when they do, I won't really need AI to help me with that anymore. It's like someone trying to perfect the "Super Auto Treasure Digger 5000 Plus" but the tool is useless if you don't have a map to the treasure. The thing is, once we get the map to the treasure, I don't really need "Super Auto Treasure Digger 5000 Plus", my little shovel here works just fine thank you very much.

- Joined

- Jul 4, 2015

- Messages

- 193

- Reaction score

- 162

Haha. It was a nonsense association. And that's the scary thing about AI. Sometimes it'll make an association or do something and nobody, not even the smartest AI researchers, knows why for sure. In this case, when it rained traffic would build up, so maybe the model was just avoiding invisible traffic regardless of whether it truly existed. But these nonsense associations are one of the biggest issues in AI research.
