Link to full article:

A study from WashU, published in Dec. 2015, found:

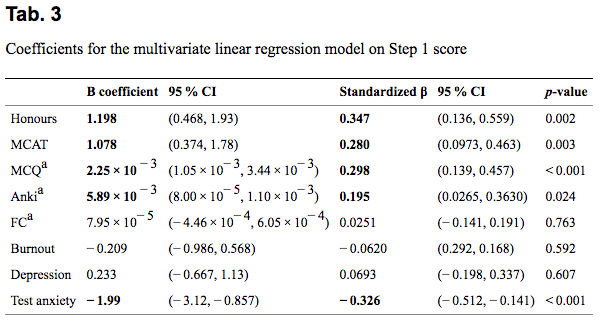

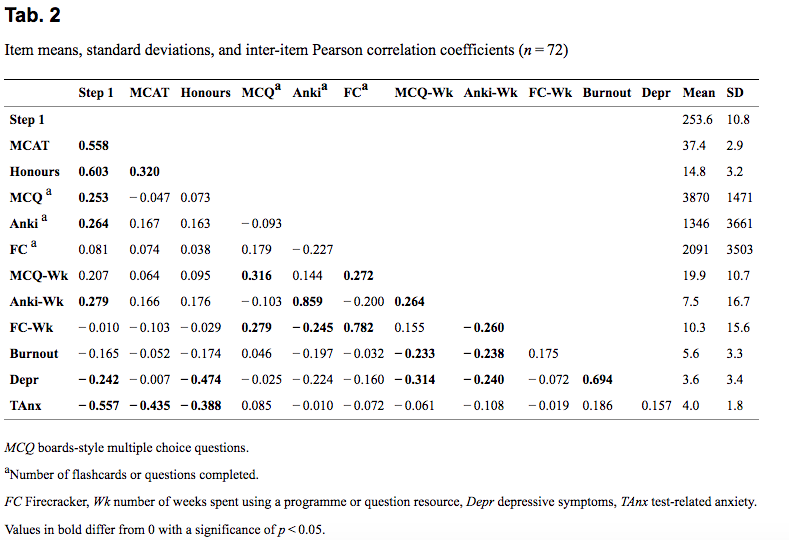

"The use of boards-style questions and Anki flashcards predicted performance on Step 1 in our multivariate model, while Firecracker use did not."

"Unique Firecracker flashcards seen did not predict Step 1 score. Each additional 445 boards-style practice questions or 1700 unique Anki flashcards was associated with one additional point on Step 1 when controlling for other academic and psychological factors."

"Students who complete more practice questions or flashcards may simply study more in general, though the lack of a benefit with Firecracker compared with Anki suggests against this confounder."
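To put the quoted per-point figures in perspective, here is a rough back-of-the-envelope sketch. It assumes the association is linear and that the two terms simply add, which is how a multivariate linear model would treat them. The function name and the example counts are made up for illustration, and the result is an association from one school's data, not a predicted score gain.

```python
# Figures quoted from the study: number of items associated with
# one additional point on Step 1 in the multivariate model.
QUESTIONS_PER_POINT = 445    # boards-style practice questions
ANKI_CARDS_PER_POINT = 1700  # unique Anki flashcards


def associated_points(practice_questions: int = 0, anki_cards: int = 0) -> float:
    """Return the Step 1 points associated with the given study volume.

    Assumption (not from the study): a plain linear extrapolation of the
    quoted figures, with the two contributions added together.
    """
    return (practice_questions / QUESTIONS_PER_POINT
            + anki_cards / ANKI_CARDS_PER_POINT)


if __name__ == "__main__":
    # Hypothetical student: 2225 practice questions and 3400 unique Anki cards.
    print(associated_points(practice_questions=2225, anki_cards=3400))  # 7.0
```

Firecracker cards are left out on purpose, since the study reports that they did not predict Step 1 score.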

Tables:

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4673073/
