In practice, they become standard of care once the FDA or EU regulators approve them for clinical use and corporations or radiology groups adopt them because they increase profit.

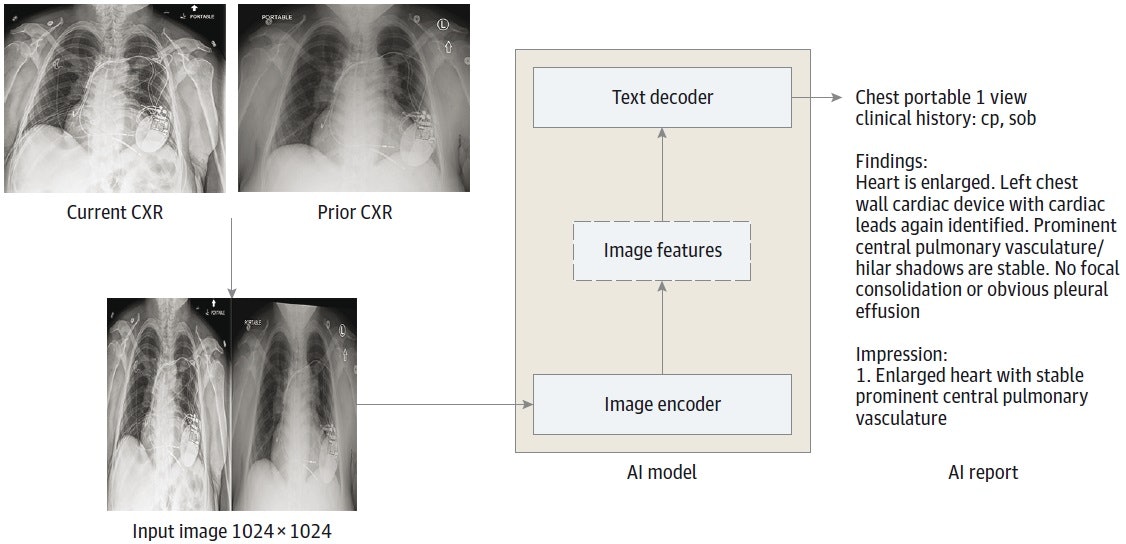

Oxipit's ChestLink software already has European Union regulatory approval to provide fully autonomous reports on normal chest X-rays. No radiologist involved.

Researchers in Finland conducted the first autonomous AI application ChestLink clinical performance study on real-world clinical data.

oxipit.ai

Viz.ai already notifies the stroke team on its own; the radiologist then provides the final report with any incidentals.

Deliver neurovascular care with the AI-powered solutions of Viz Neuro. Coordinate care for large vessel occlusion, CT perfusion, and more.

www.viz.ai

Obviously, neither these companies nor the dozens of others working in the field are going to replace us tomorrow. But if they get more approvals for fully autonomous final reads, and for fully generated reports where you just hit "sign", that could decrease the number of radiologists needed in the field.

As I said above, I still think we'll have human beings signing studies for the foreseeable future. But there could be a significant decrease in the number of people needed if AI continues to scale exponentially. The counterpoint is that regulatory capture, licensing, malpractice, the aging population, rising imaging utilization, and a slowdown in the pace of AI development may all work together to keep our jobs intact. That's why I'm not 100% certain about either outcome.