
I haven't posted here in nearly a decade, but the latest breakthroughs in AI/ML deserve an awareness post for future trainees. In 2016, radiologists were aghast when Geoffrey Hinton (one of the pioneers of the current AI era) said, "We should stop training radiologists now." If you had started a diagnostic radiology residency back then, counting intern year and fellowship, you'd only now be in your first year as an attending.

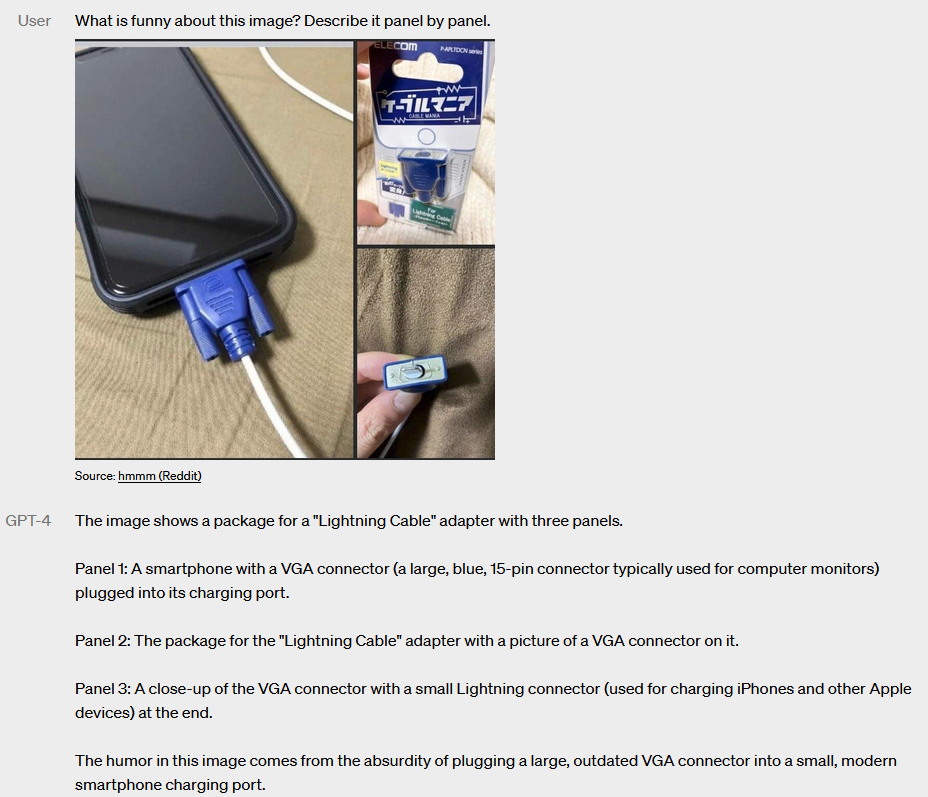

In that time, the AI community has made breakthroughs in image generation, image labeling, text generation, and numerous other areas. Today, GPT-4 was released, and it includes state-of-the-art image-to-text explanation. Here's an example provided on their website:

Source: GPT-4

If you are starting a diagnostic radiology residency today, I can't imagine your job will look the same as what you are being trained to do.

I don't know where the field will go from here, but as a first step I would say that AI/ML (along with computer science and mathematics education) should be emphasized for future radiology trainees, and perhaps even at the medical school level.
