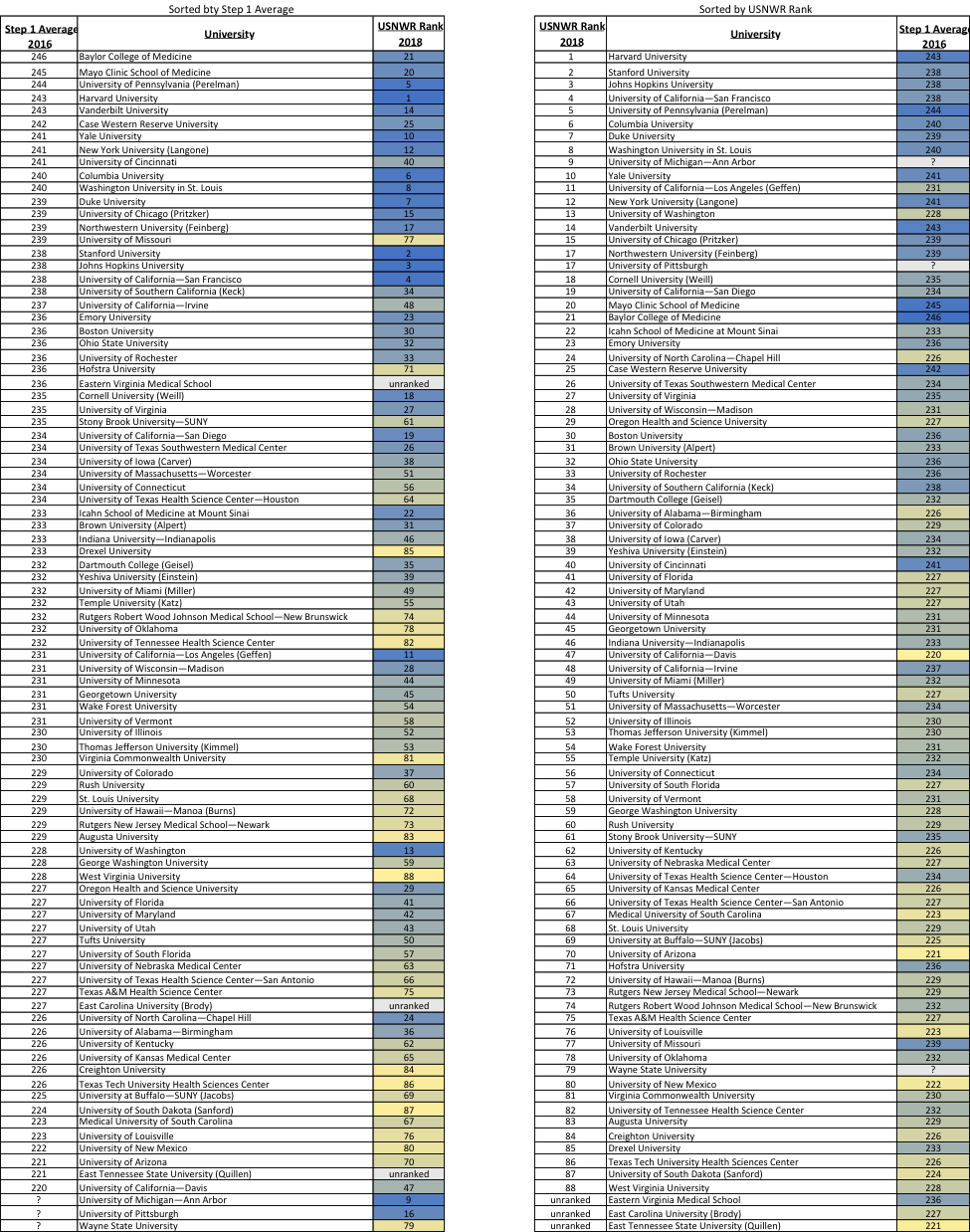

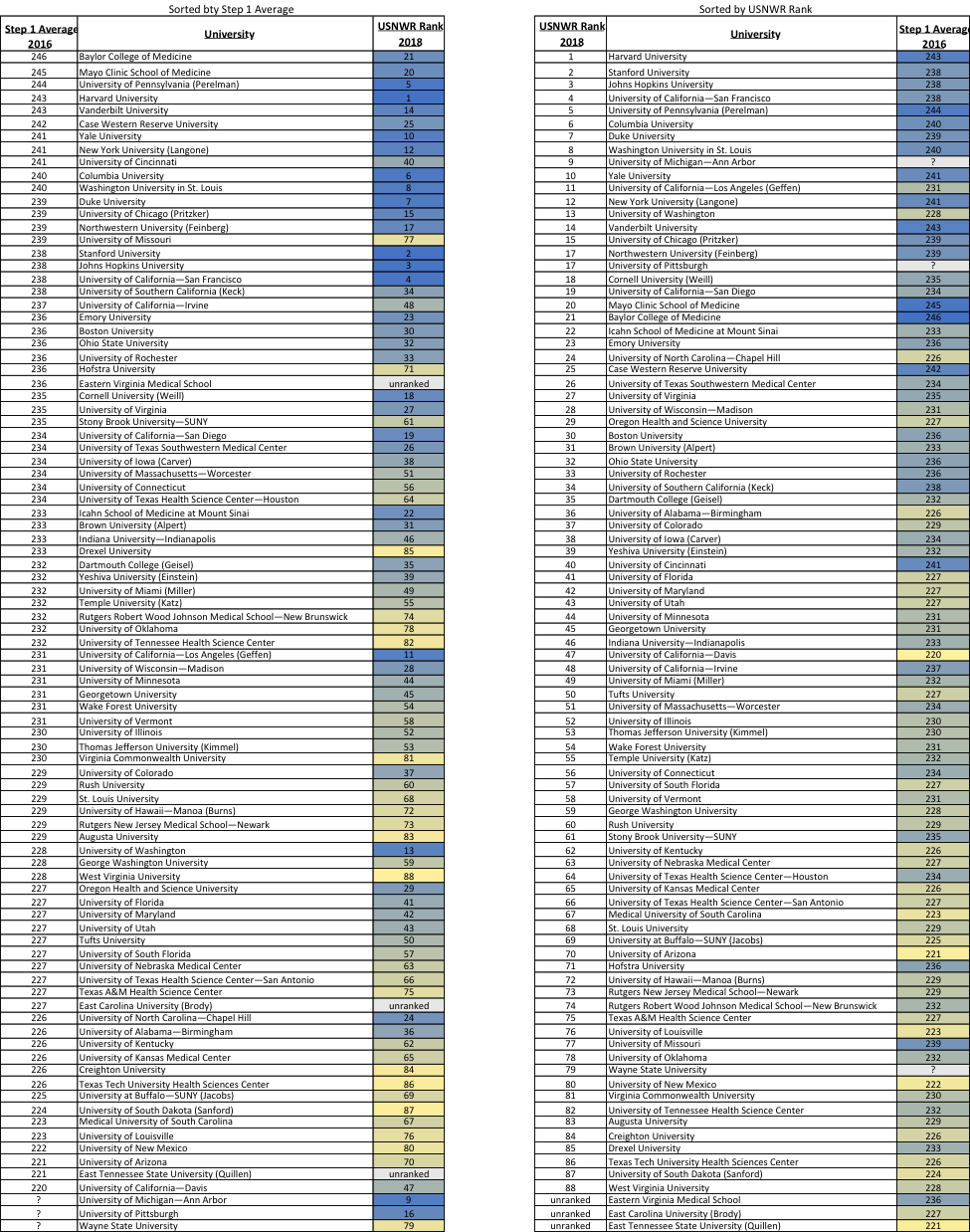

Data: USNWR research rankings (2018) and USNWR-reported Step 1 averages (2016)

Hi guys, there is an ongoing Reddit thread with a list of schools' Step 1 averages. S/o to @Serine_Minor for posting each school's average from USNWR data. I took the liberty of organizing the data a little bit and thought I'd share it with the larger pre-med community. While USNWR rank isn't a definitive metric for how good a school is or how happy you'll be there, it may be a rough indicator of the type of students who attend those institutions. When you match Step 1 average with USNWR rank, there are some interesting outliers in the data. Of course, I'd imagine the standard deviations are pretty high for these averages, so again we can probably only use this as a rough metric.

Comment: Blue = higher score/rank, yellow = lower score/rank

https://i.imgur.com/WjxjMvR.png
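If anyone wants to hunt for the outliers themselves, here is a rough Python sketch of one way to do it: rank the schools by Step 1 average, then compare that to their USNWR rank. The school names and numbers below are made-up placeholders, not the values from the chart, and `rank_gaps` is just a name I picked for illustration.

```python
# Hypothetical placeholder rows: (school, usnwr_research_rank, step1_average).
# These are NOT the real values from the chart; swap in the actual data.
rows = [
    ("School A", 1, 245),
    ("School B", 2, 238),
    ("School C", 3, 242),
    ("School D", 4, 230),
    ("School E", 5, 240),
]


def rank_gaps(rows):
    """Return (school, usnwr_rank, step1_rank, gap), largest absolute gap first.

    gap = usnwr_rank - step1_rank, so a positive gap means the school's
    Step 1 average is higher than its USNWR rank alone would suggest.
    """
    # Rank 1 = highest Step 1 average; ties keep their input order.
    by_score = sorted(rows, key=lambda r: -r[2])
    step1_rank = {school: i + 1 for i, (school, _, _) in enumerate(by_score)}
    gaps = [(s, r, step1_rank[s], r - step1_rank[s]) for s, r, _ in rows]
    return sorted(gaps, key=lambda g: -abs(g[3]))


for school, usnwr, step1, gap in rank_gaps(rows):
    print(f"{school}: USNWR #{usnwr}, Step 1 #{step1}, gap {gap:+d}")
```

Since these are averages with presumably wide spreads, a gap of one or two places probably doesn't mean much; only the big gaps are worth a second look.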
